Between September 8–10, 2025, Google quietly stopped supporting the “&num=100” parameter in its search result URLs. With no warning and no supporting documentation, a shortcut that rank tracking tools relied on simply disappeared.

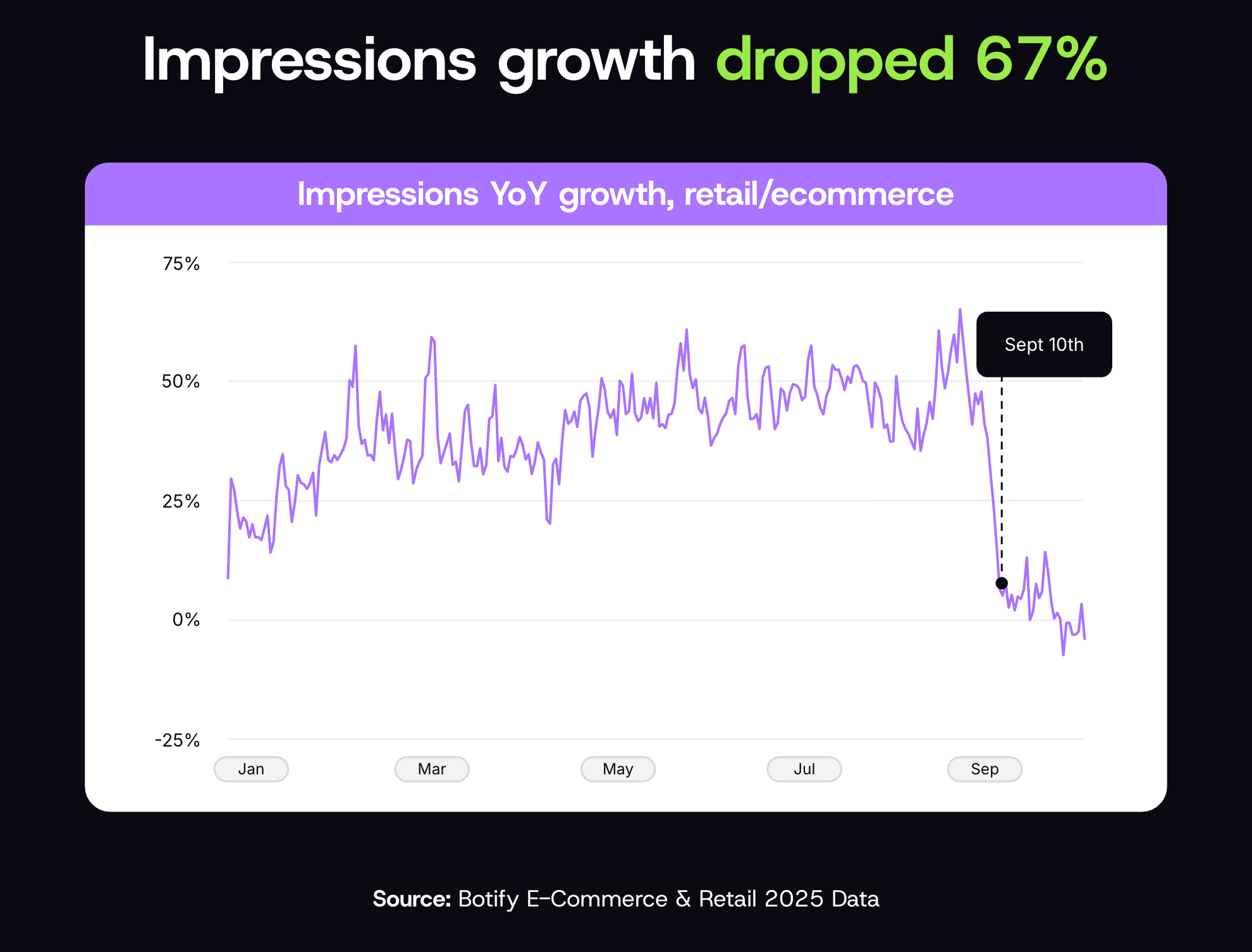

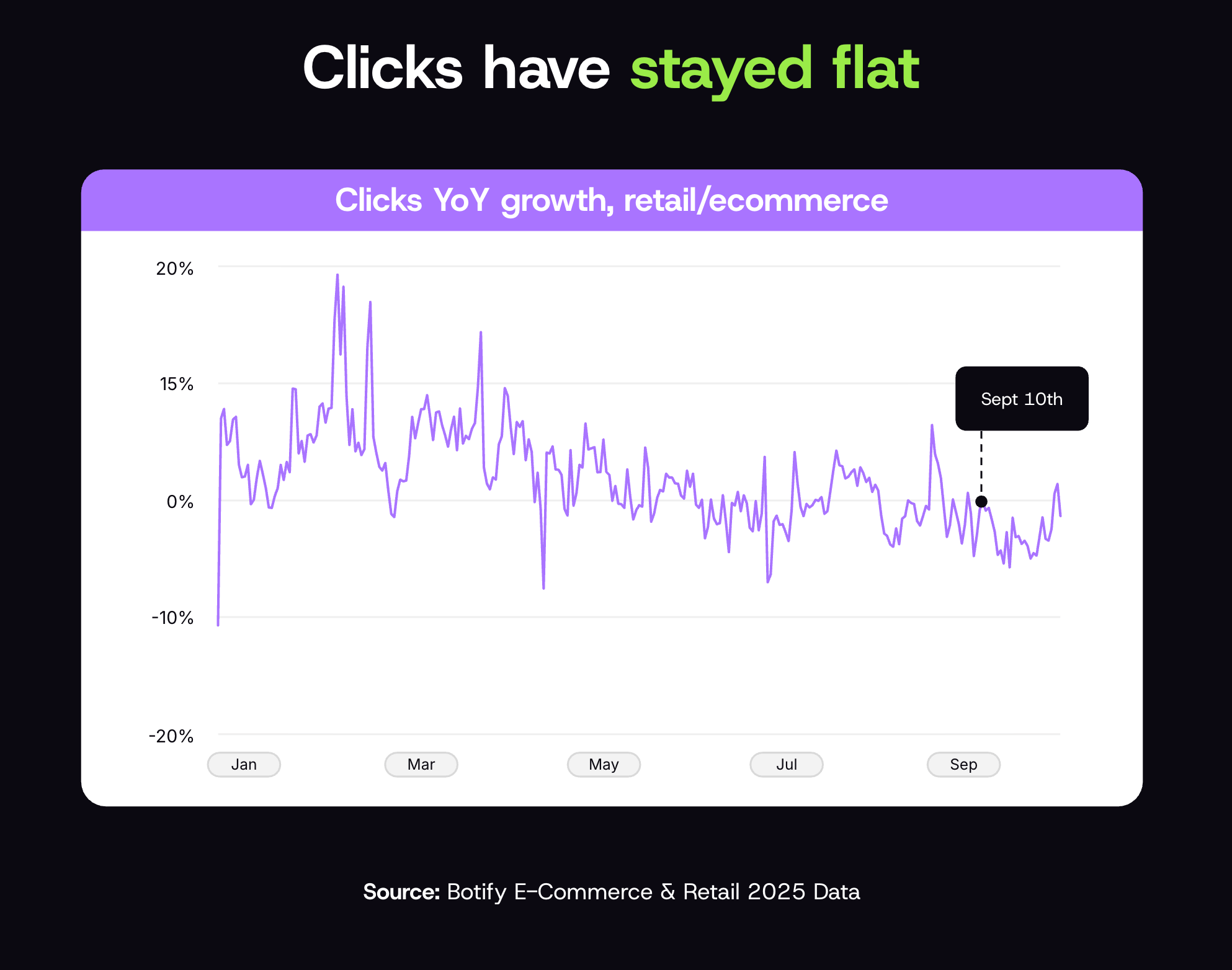

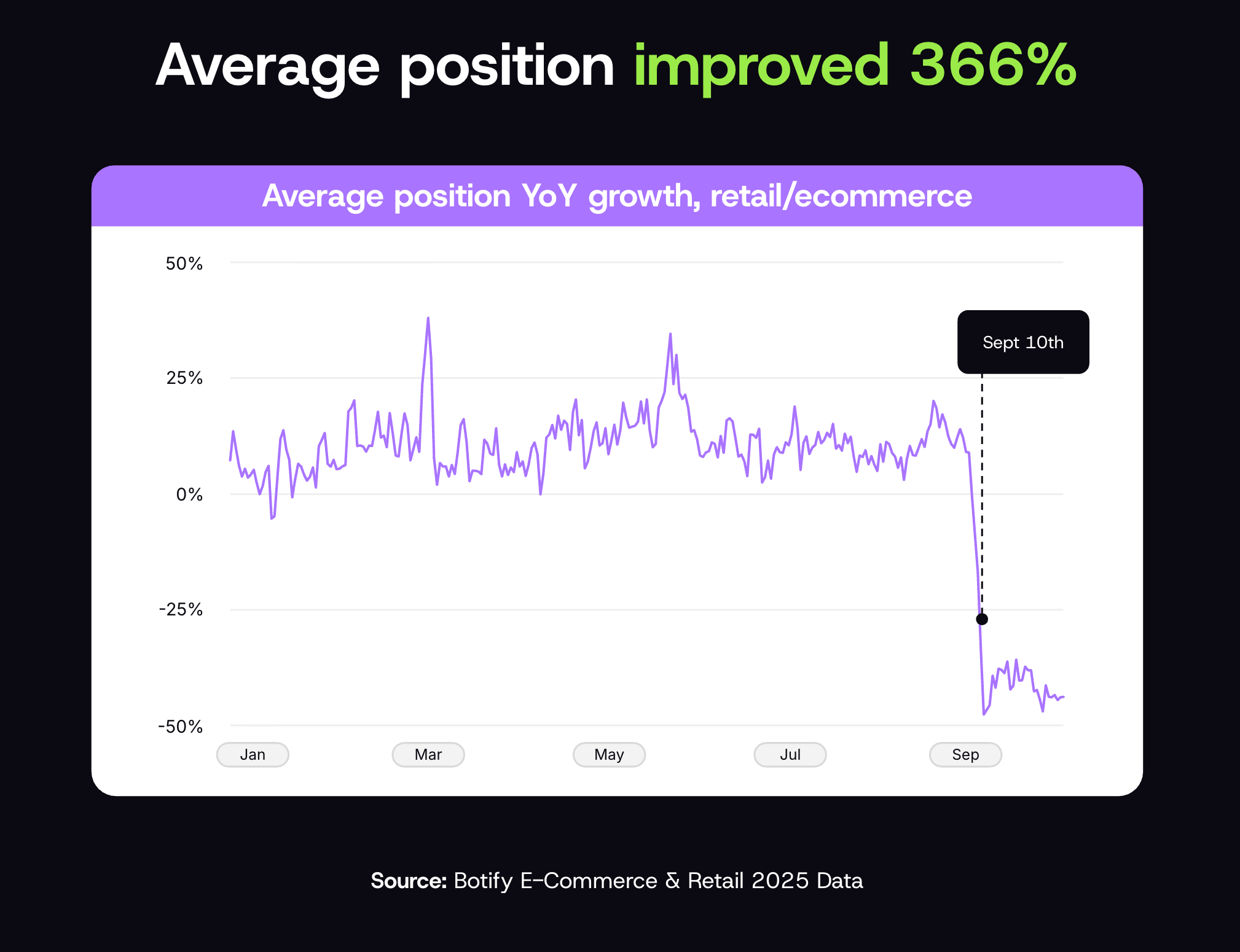

The fallout was swift. Across industries and geographies, Google Search Console (GSC) accounts showed a dramatic nosedive in impressions, while clicks remained relatively flat and average position actually improved.

We hypothesized that this drop in impressions signals something deeper: that impressions data up to that point had been inflated with noise from synthetic search activity. Our data supports that hypothesis.

What happened when Google disabled &num=100?

The &num=100 parameter let rank trackers and scrapers pull the top 100 search results for a query in a single request. Removing it broke traditional rank tracking tools: without the parameter, collecting the same 100 results takes roughly ten paginated requests instead of one. The change also affected other scrapers, such as the AI bots that use search results to contextualize LLM outputs.
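To make the mechanics concrete, here is a minimal Python sketch of the request pattern before and after the change. It assumes a standard Google results URL, that the `start` parameter offsets results page by page, and roughly ten organic results per page. The function names are hypothetical, and this is not how any particular rank tracker is built.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.google.com/search"

def urls_with_num(query: str) -> list[str]:
    """Before the change: a single request asked for the top 100 results."""
    return [f"{BASE_URL}?{urlencode({'q': query, 'num': 100})}"]

def urls_paginated(query: str, depth: int = 100, page_size: int = 10) -> list[str]:
    """After the change: walk the same depth page by page via the start offset.
    Assumes roughly page_size organic results per page (an assumption)."""
    return [
        f"{BASE_URL}?{urlencode({'q': query, 'start': offset})}"
        for offset in range(0, depth, page_size)
    ]

print(len(urls_with_num("running shoes")), len(urls_paginated("running shoes")))
```

The final line prints 1 and 10: a tenfold increase in requests for every keyword tracked to a depth of 100.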

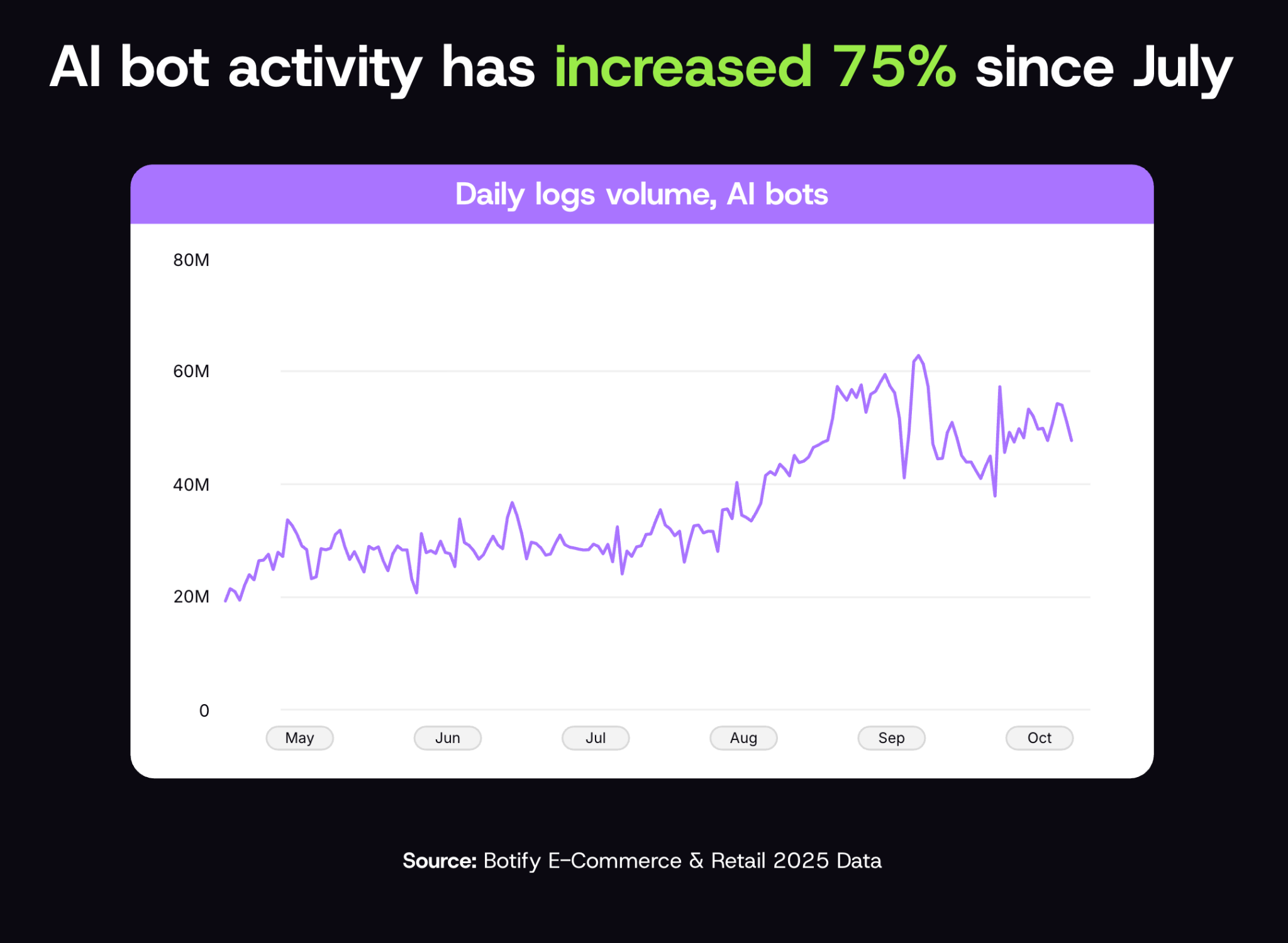

And that AI bot traffic is on the rise:

We’ve seen AI bot traffic quadruple since the beginning of 2025, with a 75% increase since July alone. This is likely because AI platforms scrape the web both for LLM training data and for live retrieval (answering queries beyond their training data in real time). As consumer use of these platforms grows, so does their need to scrape for more information. These bots also don’t make requests one at a time, as a human would. Research shows they can make over 100 scraping requests at once, and those requests ultimately feed into impression data. Once bots lose the ability to pull the top 100 results in a single request, those artificial impressions go away.

Correlation doesn’t equal causation, but given what we currently know about AI scrapers and agents, we can reasonably infer a strong causal relationship between the &num=100 parameter removal and the dip in overall impression growth.

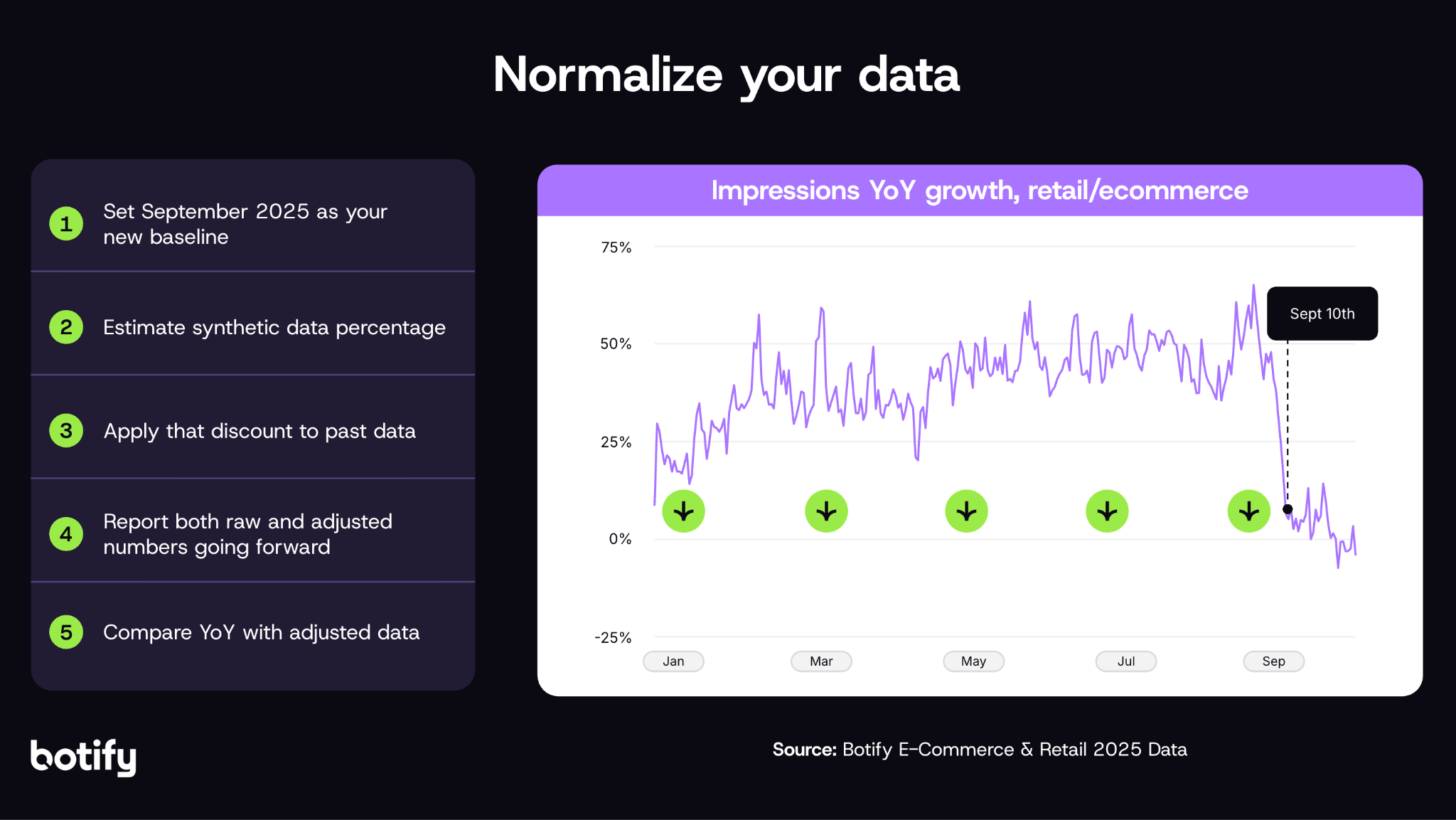

Across Botify’s client data, including several enterprise e-commerce brands, the pattern was unmistakable and held regardless of vertical:

- Impression growth collapsed by around 67%, simultaneously across verticals

- Click growth, a proxy for human engagement, stayed largely flat

- CTR growth improved ~150%, because synthetic impressions no longer diluted the ratio of human clicks to impressions

- Average position growth improved as impressions fell, because artificial impressions from lower-ranking positions (11–100) are no longer counted toward that average. This doesn’t mean your actual rankings improved; rather, it clarifies where real consumers actually see your brand on SERPs.
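The arithmetic behind these shifts is easy to reproduce with hypothetical numbers. The sketch below assumes average position is impression-weighted (a simplification of how GSC aggregates it) and that synthetic impressions land only on positions beyond page one and never produce clicks. The data is invented for illustration, not drawn from Botify’s clients.

```python
from dataclasses import dataclass

@dataclass
class Row:
    position: float  # ranking position of the site's result for a query
    impressions: int
    clicks: int

def summarize(rows: list[Row]) -> dict[str, float]:
    impressions = sum(r.impressions for r in rows)
    clicks = sum(r.clicks for r in rows)
    return {
        "impressions": impressions,
        "clicks": clicks,
        "ctr": clicks / impressions,
        # Impression-weighted average position (simplified GSC-style aggregate).
        "avg_position": sum(r.position * r.impressions for r in rows) / impressions,
    }

# Hypothetical human activity: searchers mostly see page one.
human = [Row(3, 1000, 80), Row(8, 500, 10)]
# Hypothetical synthetic activity: scrapers pulling 100 results at once
# register the site at positions 11-100, where nothing gets clicked.
synthetic = [Row(25, 800, 0), Row(60, 700, 0)]

before = summarize(human + synthetic)  # with &num=100 scraping
after = summarize(human)               # synthetic rows gone
```

In this toy example, removing the synthetic rows halves impressions (3,000 to 1,500), leaves clicks at 90, doubles CTR from 3% to 6%, and moves average position from 23.0 to about 4.7, without any ranking actually changing.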

By removing the parameter, Google has inadvertently revealed that much of the “growth” in impressions your brand may have been reporting wasn’t actually coming from potential customers. While impression growth was still positive YoY, it wasn’t as inflated as it initially seemed.

What does this mean for your brand?

Immediate implications

The first takeaway is that teams should resist the instinct to panic. A sudden drop in impressions on a dashboard normally signals a major loss in visibility. But in this case, the drop reflects the removal of synthetic activity rather than any real change in how people find your brand. In fact, because clicks remained steady and CTR improved, there is no evidence of reduced engagement from human users.

This also means your historical data now needs to be treated with caution. If your brand has spent the past year presenting impression growth to leadership, those reports were at least partly inflated and will need recalibration.

A reasonable adjustment is to assume that impressions were overcounted by a certain percentage during 2024–2025, meaning that real visibility was flatter than it appeared. Annotating past reports with this caveat will help prevent misinterpretation when comparing year-over-year performance.

Strategic implications

There are also deeper consequences to consider here regarding how brands approach search analysis. Many teams pride themselves on being “data-driven,” but when the data itself is noisy, decisions based on it can be misguided. Treating impressions at face value without understanding their origin risks drawing conclusions that aren’t grounded in real consumer behavior.

Traditional rank tracking tools that claim to tell us exactly where a site stands have been steadily losing their utility in the world of AI search, where AI-augmented results and synthetic queries are increasing. As generative AI tools scale, they increasingly rely on search to update their outputs. Every query executed by a bot or agent contributes noise to the system, inflating metrics that once served as proxies for human activity. We should expect this dynamic to intensify, not fade.

How should your brand adjust measurements moving forward?

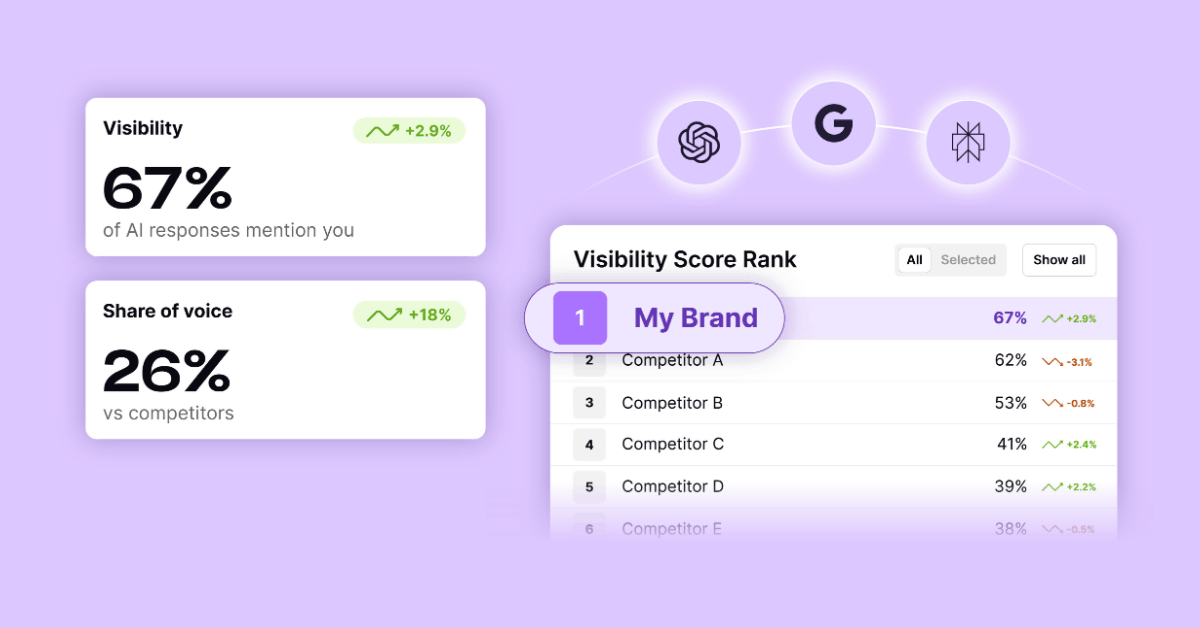

Rankings and impressions show a shrinking portion of the search experience. What matters more now is how often and in what context a brand surfaces.

In other words, the future of search success measurement is about visibility rather than rank.

For executives, this will require a change in perspective. Relying on impression-driven models of attribution and growth could leave performance estimates off by as much as 40–50%. In a competitive environment, that margin of error is unacceptable. Leadership teams need to start adapting now, not only by correcting historical baselines but also by preparing to evaluate success using new forms of visibility measurement.

Synthetic queries will almost certainly creep back into the data, but you now know that traditional metrics like impressions are no longer reliable guides on their own. To move forward, measurement must evolve to focus on metrics that capture genuine human engagement and brand visibility in new AI-driven environments.

Recalibration: Annotate historical charts and establish a “synthetic discount factor” (0–2 months)

Impression-heavy models need to be recalibrated toward human-verified engagement. Annotate existing data to readjust your expectations:

- Mark September 2025 as the point where synthetic noise fell away, revealing real impression numbers.

- Benchmark what percentage of impressions you believe to be synthetic (your synthetic discount factor).

- Apply your synthetic discount factor (likely 30–40%) to historical impressions.

- Begin dual reporting of raw GSC data vs. human-adjusted data.

- Normalize year-over-year trends with this adjustment.
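The recalibration steps above can be sketched in a few lines of Python. This is one possible approach, not a prescribed method: it assumes you have daily site-level impressions exported from GSC as a `{date: impressions}` mapping, treats September 8–10, 2025 as the transition window, and estimates the synthetic discount factor as the relative drop in mean daily impressions between equal windows before and after the change. That estimate only holds if human demand was roughly stable across both windows, so seasonality or campaigns will skew it. All function names are hypothetical.

```python
from datetime import date, timedelta
from statistics import mean

# Assumption: Sept 8-10, 2025 is the transition window for the &num=100 change.
CHANGE_START = date(2025, 9, 8)
CHANGE_END = date(2025, 9, 10)

def label(day: date) -> str:
    """Chart annotation: which side of the change a day falls on."""
    if day < CHANGE_START:
        return "pre-change"
    if day <= CHANGE_END:
        return "transition"
    return "post-change"

def estimate_discount(daily: dict[date, int], window_days: int = 28) -> float:
    """Hypothetical synthetic discount factor: relative drop in mean daily
    impressions between equal windows before and after the change.
    Assumes human demand was roughly stable across both windows."""
    pre_from = CHANGE_START - timedelta(days=window_days)
    post_to = CHANGE_END + timedelta(days=window_days)
    pre = [v for d, v in daily.items() if pre_from <= d < CHANGE_START]
    post = [v for d, v in daily.items() if CHANGE_END < d <= post_to]
    return max(0.0, 1 - mean(post) / mean(pre))

def dual_report(daily: dict[date, int], discount: float) -> list[dict]:
    """Raw GSC impressions next to a human-adjusted series. Only pre-change
    days are discounted; transition days are left raw and labeled."""
    return [
        {"date": d, "label": label(d), "raw": v,
         "adjusted": round(v * (1 - discount)) if d < CHANGE_START else v}
        for d, v in sorted(daily.items())
    ]

def adjusted_yoy(this_year: float, last_year_raw: float, discount: float) -> float:
    """YoY growth of a post-change total against last year's discounted total."""
    return this_year / (last_year_raw * (1 - discount)) - 1
```

For example, with last year’s raw total at 1,500,000 impressions, this year’s post-change total at 1,000,000, and a 35% discount factor, raw YoY reads as a 33% decline, while `adjusted_yoy` reports growth of roughly 2.6%.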

Transitional metrics: Prepare for ongoing disruption (2–4 months)

Traditional SEO signals will continue to lose efficacy as AI search expands. Consider what other metrics are more reliable indications of success:

- Begin tracking visibility metrics such as presence rate, citation share, sentiment, journey coverage, and model coverage.

- Develop a basic understanding of the impact of AI search on your brand, especially at the top of the funnel. Where is your brand appearing in AI search results, and how?

- Don’t try to recreate rank tracking for AI search. AI search visibility can’t be measured using deterministic rankings, because human interactions and AI responses are highly personalized, variable, and stochastic.
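Because AI responses vary from run to run, visibility is better expressed as a rate with an uncertainty range than as a single rank. The sketch below assumes you have already collected repeated responses to the same prompt, and it uses simple illustrative definitions, since the metrics named above are not formally specified here: presence rate as the share of responses that mention the brand, citation share as the brand domain’s share of all citations, and a Wilson score interval to show how far the presence rate could plausibly move with more samples. The `Response` type and function names are hypothetical.

```python
from dataclasses import dataclass, field
from math import sqrt

@dataclass
class Response:
    """One sampled AI answer (hypothetical structure)."""
    text: str
    cited_domains: list[str] = field(default_factory=list)

def presence_rate(responses: list[Response], brand: str) -> float:
    """Share of sampled responses that mention the brand.
    Naive case-insensitive substring match, for illustration only."""
    if not responses:
        return 0.0
    hits = sum(brand.lower() in r.text.lower() for r in responses)
    return hits / len(responses)

def citation_share(responses: list[Response], domain: str) -> float:
    """The domain's share of all citations across the sampled responses."""
    cited = [d for r in responses for d in r.cited_domains]
    return cited.count(domain) / len(cited) if cited else 0.0

def wilson_interval(p: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion p observed over n samples."""
    if n == 0:
        raise ValueError("need at least one sample")
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half
```

With only a handful of samples the interval is wide, which is the point: a single AI response says little about a brand’s visibility, while repeated sampling narrows the range.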

AI-native measurement (4–12+ months)

We can expect AI tools to keep improving. There is also the potential for new AI standards to develop across the paid and organic search ecosystem, demanding transparency into AI data and interactions. This could spur platforms like ChatGPT to present dashboards similar to Google Search Console in the future.

Ideally, we’ll reach a point where traditional search, AI search, chat, and feeds (traditionally paid media) come together in AI-native reporting that connects visibility to revenue. This would follow the same pattern as earlier marketing innovations, such as social media.

The big picture

Every so often, the marketing industry gets an unfiltered look at how search really works. In 2006, AOL accidentally published anonymized search queries from hundreds of thousands of users. While it created a privacy scandal, the dataset reshaped SEO for years. It gave practitioners their first reliable model of click-through rates by position, revealing just how much consumer attention concentrated at the top of the results page.

This &num=100 parameter removal provides a similar moment of clarity by stripping away the layer of synthetic queries that had been inflating impression counts. For brands, the event exposed the noise in the machine. What looked like steady growth in impressions over the past year was not additional visibility, but rather automated activity layered on top of real traffic. If your team was using impressions as a proxy for brand visibility, decisions based on that data were made with distorted inputs.

At the same time, this glimpse of truth provides a new baseline for AI search success measurement. Just as the AOL leak gave the industry its first CTR models, the &num=100 change allows us to measure how much of the search landscape is real human activity and how much is artificial. That benchmark can help teams recalibrate historical data, set more realistic expectations for growth, and prepare for the ongoing shifts AI will bring. The future of measurement will depend on finding ways to distinguish human engagement from synthetic traffic and reframing success around visibility in an AI-driven landscape.
