Top 5 Depth Issues… and SEO Solutions

Page depth in SEO… what is it?

In a website structure, a page’s depth is the number of clicks needed to reach that page from the homepage, using the shortest path. For instance, a page linked from the home page is at depth 1.
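To make the definition concrete, here is a minimal Python sketch of how depth can be computed: a breadth-first search from the homepage, which always reaches a page first along a shortest path. It assumes the link graph is already available as a dictionary mapping each URL to the URLs it links to. `page_depths` and the sample URLs are hypothetical, not Botify's implementation.

```python
from collections import deque


def page_depths(links, home):
    """Return {url: depth} for every page reachable from `home`.

    `links` maps each URL to the list of URLs it links to. Breadth-first
    search visits pages in order of increasing distance, so the first time a
    page is reached is along a shortest path from the homepage.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical link graph.
site = {
    "/": ["/shoes", "/about"],
    "/shoes": ["/shoes?page=2"],
    "/shoes?page=2": ["/shoes/red-boot", "/shoes?page=3"],
    "/shoes?page=3": ["/shoes/blue-boot"],
    "/about": ["/shoes/red-boot"],
}
print(page_depths(site, "/"))
```

In this graph, `/shoes/red-boot` ends up at depth 2 through `/about`, not at depth 3 through the pagination, because only the shortest path counts, while `/shoes/blue-boot` sits at depth 4.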

What is it not?

Not to be confused with the notion of page depth in Web Analytics tools, which is related to a user’s visit and the sequence of pages he saw during that visit: in that context, a page’s depth is the number of clicks after which the user reached the page.

Why should you care?

Deep pages have a lower PageRank (or none) and are less likely to be crawled by search engine robots. So robots are less likely to discover them and, if they do, less likely to come back to check whether they still exist and whether they have changed. Of course, being crawled regularly does not mean they will rank, but it’s a necessary starting point.

Bottom line, deep pages are less likely to rank.

For more information, check out our article “Is Page Depth a Ranking Factor?”

How do you know you have a depth problem?

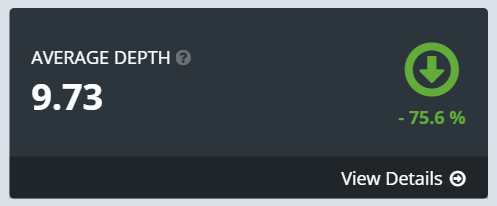

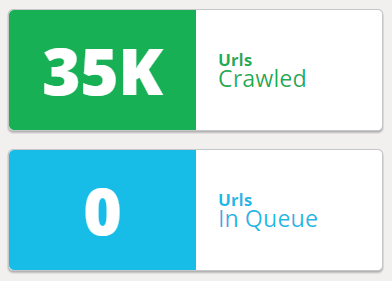

In the Botify Report:

- From the ‘average depth’ indicator, in the ‘Distribution’ tab. It should ideally be between 3 and 5, depending on your website’s size and structure.

- From the shape of your global depth distribution graph. A typical distribution by depth has a bell shape, or a truncated bell if the website was not fully crawled. Ideally, the bell is high and narrow, and most of the website’s volume (the top of the bell) sits around depth 3 to 5. If the bell is rather flat and/or has a long tail on the right with a gentle slope, there is a depth problem.

- From the Suggested Patterns for deep URLs. If a few patterns account for the vast majority of deep URLs (as shown in ‘% all metrics’), the problem is already identified. You can also see a depth distribution graph for that subset of URLs: click on the suggested pattern, click on ‘Explore all URLs’, and go to the depth tab.
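The first two checks boil down to simple statistics over the depth of every crawled page. The Python sketch below (hypothetical `depth_summary` helper, sample data made up) computes the average depth, the number of pages per depth, and the share of pages deeper than a threshold as a rough stand-in for the ‘long tail on the right’. The default threshold of 5 is an assumption based on the 3 to 5 guideline, not a Botify setting.

```python
from collections import Counter


def depth_summary(depths, deep_threshold=5):
    """Summarize a {url: depth} mapping.

    Returns the average depth, the number of pages at each depth (sorted by
    depth), and the share of pages deeper than `deep_threshold`.
    """
    values = list(depths.values())
    average = sum(values) / len(values)
    distribution = dict(sorted(Counter(values).items()))
    deep_share = sum(1 for d in values if d > deep_threshold) / len(values)
    return average, distribution, deep_share


# Hypothetical crawl result: mostly shallow pages, plus a thin tail of deep ones.
depths = {"/": 0, "/a": 1, "/b": 2, "/c": 3, "/d": 3, "/e": 4, "/f": 8, "/g": 12}
average, distribution, deep_share = depth_summary(depths)
print(average, distribution, deep_share)
```

Here the average (about 4.1) looks acceptable, but a quarter of the pages are deeper than 5: the tail of the distribution, not the average alone, reveals the problem.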

A few examples of global depth distribution graphs that show a problem:

Example #1:

Depths 11 and beyond are grouped together, but we can find out that the website extends down to 18 clicks deep: clicking the icon in the upper right corner opens a data table that we can sort by the depth column to see the deepest pages first.

Example #2:

Ideally, there should be very few pages deeper than depth 5 or 6.

Note that depth distribution graphs also show indexable vs non-indexable pages, according to the most basic compliance criteria: read more about SEO indexable URLs.

So who are the culprits?

1) Pagination

2) Too many navigation filters

3) Tracking parameters in URLs

4) Malformed URLs

5) A perpetual link

In the first example above, the depth problem happens to be caused by filters.

In the second example, the depth problem is caused by malformed URLs, some of which create a perpetual link.

Let’s take a closer look at these main causes and see how you can start pinpointing and solving these problems.

Once you know what to look for first, see how to find out which pages create excessive depth on your website.

1) Pagination

What?

Any long, paginated list. It gets worse with any combination of the following:

- Very long lists

- A low number of items per page

- A pagination scheme that only lets you jump a few pages ahead (apart from a link to the last page). Imagine there are 50 pages and you can only move 3 or 5 pages forward at a time: the list is already 10 to 16 clicks deep!
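The arithmetic in the last bullet can be checked with a small simulation. This Python sketch assumes each page of the list links to the next `window` pages plus the last page, and measures depth in clicks from page 1 of the list (add the depth of page 1 itself to get the depth from the homepage). `pagination_depths` is a hypothetical helper.

```python
from collections import deque


def pagination_depths(num_pages, window):
    """Click depth of each page of a paginated list, counted from page 1.

    Assumed scheme: every page links to the next `window` pages and to the
    last page. Links to previous pages never shorten the path from page 1,
    so they are left out.
    """
    depth = {1: 0}
    queue = deque([1])
    while queue:
        page = queue.popleft()
        targets = list(range(page + 1, min(page + window, num_pages) + 1))
        targets.append(num_pages)  # the "last page" link
        for target in targets:
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


for window in (5, 3):
    deepest = max(pagination_depths(50, window).values())
    print(f"50 pages, {window} pages forward at a time: {deepest} clicks deep")
```

Run as is, it reports 10 clicks for a window of 5 and 16 clicks for a window of 3, which matches the figures above.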

Where?

In lists that present the website’s primary content (products, articles, etc.), or in other lists that don’t immediately come to mind, such as lists of user-generated content (product ratings, comments on articles).

How serious is it?

a) Worst-case scenario: some key content is too hard to reach, so robots won’t see it!

That will be the case if these deep lists are the only path to some primary content (for instance, products that aren’t listed anywhere else, in any smaller list – an internal search box can help users find content, but it won’t help robots).

You will know if that’s the case by exploring the deepest pages in the URL explorer (click on the max depth in the depth graph) and checking if they include products pages (add a filter on the URL to match your product pages).
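If you work from exported crawl data instead of the URL explorer, the same check can be sketched in a few lines of Python. The `deep_product_pages` helper, the sample data and the product URL pattern (modeled on the example product URLs used later in this article) are assumptions; adapt the regular expression to your own product URLs.

```python
import re


def deep_product_pages(depths, product_pattern, min_depth):
    """Return product URLs at `min_depth` or deeper, deepest first.

    `depths` maps URL paths to click depth; `product_pattern` is a regular
    expression matching your product URLs.
    """
    regex = re.compile(product_pattern)
    hits = [(d, url) for url, d in depths.items()
            if d >= min_depth and regex.search(url)]
    hits.sort(key=lambda hit: (-hit[0], hit[1]))  # deepest first, then by URL
    return [url for _, url in hits]


# Hypothetical crawl data.
depths = {
    "/": 0,
    "/products/blue-boot_id13.html": 3,
    "/boots?page=14": 14,
    "/products/red-boot_id12.html": 15,
    "/comments?page=20": 20,
}
print(deep_product_pages(depths, r"^/products/[^/]+_id\d+\.html$", min_depth=10))
```

A non-empty result, here a product page found only at depth 15, is the worst-case signal described above.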

b) Some useless content is polluting your valuable content: you shouldn’t let robots see it!

In the alternate scenario, these deep pages are mostly useless (for SEO):

- Pagination for long, hardly segmented lists (the “list all” type) that present products that are found elsewhere, in more qualified, shorter lists.

- Pagination that leads to content that is not key for organic traffic (such as user comments on articles).

A small part of that content may have some traffic potential; the question is how to detect this higher-quality content (here are a couple of ideas for user-generated content: other users rating a comment as useful, the number of replies, a minimum length for comments…). Once identified, it should be placed higher up in the website structure.
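As an illustration of the signals listed above, here is a hypothetical Python rule that flags comments worth promoting. The thresholds, and the choice to require a minimum length plus at least one engagement signal, are assumptions to tune against your own data.

```python
from dataclasses import dataclass


@dataclass
class Comment:
    text: str
    useful_votes: int  # how many users rated the comment as useful
    replies: int       # number of replies to the comment


def worth_promoting(comment, min_length=200, min_votes=3, min_replies=2):
    """Hypothetical quality rule: long enough, and other users engaged with it."""
    long_enough = len(comment.text) >= min_length
    engaged = comment.useful_votes >= min_votes or comment.replies >= min_replies
    return long_enough and engaged


comments = [
    Comment("Great boots, true to size. " * 10, useful_votes=5, replies=0),
    Comment("+1", useful_votes=10, replies=4),
]
print([worth_promoting(c) for c in comments])
```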

What should you do?

a) If deep lists are the only path to some important content, you will need to work on the website’s navigation structure: add finer categories, new types of filters (be careful with filters: see section 2), etc., to create additional, shorter lists that – shazam! – will also create new SEO target pages for middle-tail traffic.

You can also list more items per page and, on each page, link to more pages (for instance, not just the next 3 or 5 pages, but the next 10 plus multiples of 10).
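To see how much the linking scheme matters, the Python sketch below compares the deepest page of a 500-page list under two schemes: the next 5 pages plus the last page, and the next 10 pages plus every page number that is a multiple of 10 plus the last page. Reading ‘multiples of 10’ as absolute page numbers (10, 20, 30…) is an assumption, and the helper names are hypothetical.

```python
from collections import deque


def max_depth(num_pages, links_from):
    """Deepest page of a paginated list, in clicks from page 1.

    `links_from(page, num_pages)` returns the page numbers linked from `page`.
    """
    depth = {1: 0}
    queue = deque([1])
    while queue:
        page = queue.popleft()
        for target in links_from(page, num_pages):
            if 1 <= target <= num_pages and target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return max(depth.values())


def next_five(page, num_pages):
    # Next 5 pages, plus the last page.
    return list(range(page + 1, page + 6)) + [num_pages]


def next_ten_and_tens(page, num_pages):
    # Next 10 pages, every multiple of 10 (10, 20, 30...), plus the last page.
    return (list(range(page + 1, page + 11))
            + list(range(10, num_pages + 1, 10))
            + [num_pages])


print(max_depth(500, next_five), max_depth(500, next_ten_and_tens))
```

Under these assumptions, the first scheme leaves some pages 100 clicks away from page 1, while the second brings every page within 2 clicks.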

Also check that you have added rel="next" and rel="prev" tags to your pagination links. This won’t have any effect on depth, but it will help robots get the sequence of pages right.

b) If deep pages are mainly low-quality / useless pages, the recommended actions depend on volumes and proportions:

Are there a lot of them, compared to your core, valuable content?

- Yes: you should make sure robots don’t go there. They are bad for your website’s image and, even if robots only crawl a small portion of deep content, these pages could end up wasting significant crawl resources that would be best used on valuable content.

- No: you can leave them be. The small number of deepest pages won’t be crawled much – but that’s precisely what you want in that case. You probably have higher priorities on your to-do list.


2) Too many navigation filters

What?

If your website’s navigation includes filters that are accessible to robots, and if those filters can be combined at will, then robots keep finding new filter combinations as they find new pages, and this creates depth as well as a high volume of pages.

Where?

Any navigation scheme with multiple filters that create new pages (as opposed to an Ajax refresh of the same page).

How serious is it?

It can get serious quickly, as the number of pages grows exponentially with the number of filters that can be combined. Some filter combinations have organic traffic potential, but most don’t, especially those with many filters, which generate most of the volume.
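A rough count shows why. In this Python sketch, each filter can take at most one value at a time, and the value counts (10 types, 50 brands, 12 colors) are made up for illustration; `filtered_list_count` is a hypothetical helper.

```python
from itertools import combinations
from math import prod


def filtered_list_count(values_per_filter, max_filters=None):
    """Count distinct filtered lists, with at most one value per filter.

    `values_per_filter` lists how many values each filter offers;
    `max_filters=None` means any number of filters can be combined.
    """
    limit = len(values_per_filter) if max_filters is None else max_filters
    total = 0
    for size in range(1, limit + 1):
        for combo in combinations(values_per_filter, size):
            total += prod(combo)  # one list per choice of values
    return total


# Hypothetical store: 10 types, 50 brands, 12 colors.
print(filtered_list_count([10, 50, 12]))                 # any combination
print(filtered_list_count([10, 50, 12], max_filters=2))  # at most 2 filters
```

Allowing any combination yields 7,292 filtered lists; capping combinations at 2 filters yields 1,292, because the 6,000 three-filter lists disappear. Multi-select filters (several colors at once) would make the count grow much faster still.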

What should you do?

The best practice is to limit systematic links to filtered lists (crawlable by robots) to a single filter, or 2 filters at the same time at most. For instance, if you have a clothing store with filters by type / brand / color, you can allow systematic combinations of 2 filters: type + brand (jeans + Diesel), color + type (red + dress) or brand + color (UGG + yellow). If you know that a combination of more filters has traffic potential (‘black’ + ‘GStar’ + ‘t-shirt’), a link can be added manually, but we don’t want all combinations of 3 filters (that would create Armani + boots + yellow, which probably has no traffic potential and could well be an empty list).
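One way to express this rule in code, as a hypothetical Python sketch: systematic links are generated only for lists with at most two filters, and larger combinations get a link only if they appear in a hand-curated allow-list. The `should_link` function, the `name:value` keys and the allow-list content are assumptions.

```python
# Hypothetical allow-list of hand-picked combinations with known traffic potential.
MANUAL_COMBINATIONS = {
    frozenset({"color:black", "brand:gstar", "type:t-shirt"}),
}


def should_link(filters, max_filters=2):
    """Decide whether a crawlable link to this filtered list should exist.

    `filters` maps filter names to the selected value, e.g. {"type": "jeans"}.
    """
    if len(filters) <= max_filters:
        return True  # systematic links: 1 or 2 filters
    key = frozenset(f"{name}:{value}" for name, value in filters.items())
    return key in MANUAL_COMBINATIONS  # larger combinations: manual links only


print(should_link({"type": "jeans", "brand": "diesel"}))
print(should_link({"brand": "armani", "type": "boots", "color": "yellow"}))
print(should_link({"color": "black", "brand": "gstar", "type": "t-shirt"}))
```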


3) Tracking parameters in URLs

What?

A tracking parameter (such as ‘?source=thispage’) that is added to the URL to reflect the user’s navigation. This can create a huge number of URLs (all combinations: all pages the user can come from, for each tracked page), or even an infinite number if several parameters can be combined and the full path is tracked (it’s then a spider trap).

Where?

For instance, in a “similar products” or “related stories” block, where links to other products or articles include a parameter that tracks which page the user came from (not to be confused with parameters that track ads or email campaigns: here, we’re talking about systematic on-site tracking of internal links).

How serious is it?

Very serious if many pages are tracked. The tracking parameters create a large number of duplicates of important pages (otherwise you wouldn’t want to track them!).

What should you do?

Transmit the tracking information after a ‘#’ at the end of the URL, where it won’t change the URL requested from the server (rather than as a URL parameter after a ‘?’, which is part of the URL). Redirect URLs with tracking parameters to the version of the URL without tracking.
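A minimal Python sketch of both halves of that advice, using only the standard library’s `urllib.parse`: `tracked_link` puts the tracking information in the fragment, and `clean_url` computes the redirect target for a URL that still carries the parameter. The parameter name `source` comes from the example above; treating it as the only tracking parameter, and both helper names, are assumptions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: 'source' is the only on-site tracking parameter.
TRACKING_PARAMS = {"source"}


def tracked_link(url, source):
    """Carry tracking information in the fragment (after '#').

    Browsers do not send the fragment to the server, so the URL that gets
    requested stays the same. Assumes `url` has no fragment yet.
    """
    return f"{url}#source={source}"


def clean_url(url):
    """Return (url without tracking parameters, whether a 301 is needed)."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    kept = [(key, value) for key, value in params if key not in TRACKING_PARAMS]
    if len(kept) == len(params):
        return url, False  # nothing to strip: serve the page as is
    cleaned = urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )
    return cleaned, True


print(tracked_link("https://www.mywebsite.com/products/red-boot", "home"))
print(clean_url("https://www.mywebsite.com/list?page=2&source=home"))
```

Comparing parameter counts, rather than comparing the rebuilt URL with the original string, avoids issuing redirects for URLs whose query string merely encodes characters differently.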


4) Malformed URLs

What?

Some pages include malformed links which create new pages when they should link to existing pages.

Malformed URLs often return a 404 HTTP status code (Not Found). But it is also quite common for a malformed URL to return a page that looks normal to the user: typically, in this case, malformed links still include an identifier which is used to populate the page content.

The most common problems include:

- a missing human-readable element in the URL, for instance http://www.mywebsite.com/products/_id123456.html instead of http://www.mywebsite.com/products/product-description_id123456.html

- repeated elements, for instance http://www.mywebsite.com/products/products/product-description_id123456.html
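Both patterns can be detected mechanically when you audit crawled URLs. This Python sketch assumes the product URL scheme shown in the examples above (`/products/<slug>_id<digits>.html`) and only looks for adjacent repeated path segments; `diagnose` and its labels are hypothetical.

```python
import re
from urllib.parse import urlsplit

# Assumed URL scheme for product pages: /products/<slug>_id<digits>.html
VALID_PRODUCT_PATH = re.compile(r"^/products/[a-z0-9-]+_id\d+\.html$")
MISSING_SLUG = re.compile(r"/_id\d+\.html$")


def diagnose(url):
    """Classify a product URL as ok or as one of the malformed patterns."""
    path = urlsplit(url).path
    segments = [segment for segment in path.split("/") if segment]
    if any(a == b for a, b in zip(segments, segments[1:])):
        return "repeated element"
    if MISSING_SLUG.search(path):
        return "missing human-readable element"
    if VALID_PRODUCT_PATH.match(path):
        return "ok"
    return "unrecognized"


for url in (
    "http://www.mywebsite.com/products/product-description_id123456.html",
    "http://www.mywebsite.com/products/_id123456.html",
    "http://www.mywebsite.com/products/products/product-description_id123456.html",
):
    print(diagnose(url), url)
```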

Where?

Potentially anywhere on the website.

How serious is it?

It depends on the volume. It is worse when malformed URLs return an HTTP 200 (OK) status code, because then there is a potentially large number of duplicate pages, most probably duplicates of key content.

What should you do?

Replace malformed links with correct links on the website.

Malformed URLs should redirect to the correct URL (HTTP 301 permanent redirect) or return a 404 HTTP status code (Not Found), since robots will keep crawling them for a while.
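For the redirection, the server needs a way to rebuild the correct URL from the malformed one. This Python sketch assumes, as described above, that malformed URLs still carry the product identifier, and that a lookup table from identifier to human-readable slug is available. `SLUGS` and `respond` are hypothetical, and wiring the result into your web server is left out.

```python
import re
from urllib.parse import urlsplit

# Hypothetical lookup table: product identifier -> human-readable slug.
SLUGS = {"123456": "product-description"}
PRODUCT_ID = re.compile(r"_id(\d+)\.html$")


def respond(url):
    """Return (HTTP status, Location header or None) for a product URL."""
    parts = urlsplit(url)
    match = PRODUCT_ID.search(parts.path)
    if match is None or match.group(1) not in SLUGS:
        return 404, None  # no usable identifier: Not Found
    product_id = match.group(1)
    canonical_path = f"/products/{SLUGS[product_id]}_id{product_id}.html"
    if parts.path == canonical_path:
        return 200, None  # correct URL: serve the page
    # Malformed but identifiable: permanent redirect to the correct URL.
    return 301, f"{parts.scheme}://{parts.netloc}{canonical_path}"


print(respond("http://www.mywebsite.com/products/products/product-description_id123456.html"))
```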


5) A perpetual link

What?

Any link that is present on every page of a given template and always creates a new URL.

Where?

The textbook example is ‘next day’ or ‘next month’ in a calendar. Some malformed URLs also have the same effect (a repeated element that is added again to the URL on each new page).

The impact on the website structure is similar to that of pagination, only worse: you can only go forward one page at a time, and there is no end.

How serious is it?

It depends on the volume (do these pages create new content pages as well?), and whether the template these pages are based on corresponds to important pages or not.

What should you do?

For a perpetual ‘next’ button: implement an ‘end’ value that makes sense for your website (in the calendar example, that would be the last day that has events, and it would be updated with each calendar content update).
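A sketch of such an ‘end’ value for the calendar example, in Python: the ‘next day’ link is only rendered while the following day is not after the last day that has events. The `/calendar/YYYY-MM-DD` URL scheme and the `next_day_link` helper are assumptions.

```python
from datetime import date, timedelta
from typing import Optional


def next_day_link(current: date, last_event_day: date) -> Optional[str]:
    """URL for the calendar's 'next day' link, or None past the last event day.

    `last_event_day` must be refreshed whenever calendar content changes.
    """
    following = current + timedelta(days=1)
    if following > last_event_day:
        return None  # the end value: render no 'next' link
    return f"/calendar/{following.isoformat()}"


print(next_day_link(date(2024, 3, 30), last_event_day=date(2024, 3, 31)))
print(next_day_link(date(2024, 3, 31), last_event_day=date(2024, 3, 31)))
```

With the end value in place, robots following ‘next day’ stop at the last day with events instead of walking into an endless chain of empty pages.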

For a malformed URL, see 4).
