Performance Really Matters for SEO

For some websites, improving performance may actually be the optimization with the biggest impact on organic traffic. For others, it may not be the #1 SEO issue, but it’s still probably in the top 5.

Being crawled is the first step toward being indexed… and visited through Google. What if part of your website were completely unknown to Google, simply because the search engine doesn’t have time to explore it?

Luckily, there is something you can do: improve performance, and it will almost mechanically increase Google’s crawl volume.

If Google gets your pages faster, it will be able to fetch more pages in the same amount of time. That’s great news, because the time allocated to crawling your website (the crawl budget) is not likely to change overnight. It is largely based on criteria linked to overall popularity (PageRank and external links, authority, history, etc., which take time to build up), as well as on the quality and amount of content.
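To make the arithmetic concrete, here is a deliberately simplified model. It assumes the crawl budget is a fixed amount of fetch time and that pages are fetched one after another; real crawlers fetch in parallel and adjust their rate, so treat the numbers as relative, not absolute. The one-hour budget is a made-up figure.

```python
def pages_per_budget(budget_seconds: float, avg_download_ms: float) -> int:
    """Pages fetchable within a fixed crawl-time budget.

    Simplifying assumption: fetches are sequential and the budget is pure
    download time, so capacity is simply budget / average download time.
    """
    if avg_download_ms <= 0:
        raise ValueError("average download time must be positive")
    return int(budget_seconds * 1000 // avg_download_ms)


if __name__ == "__main__":
    budget = 3600  # hypothetical budget: one hour of fetch time
    for avg_ms in (1200, 800, 500):
        # prints 3000, 4500 and 7200 pages respectively
        print(f"{avg_ms} ms per page -> {pages_per_budget(budget, avg_ms)} pages")
```

Under this model, halving the average download time (say from 1000 ms to 500 ms) doubles the number of pages fetched within the same budget.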

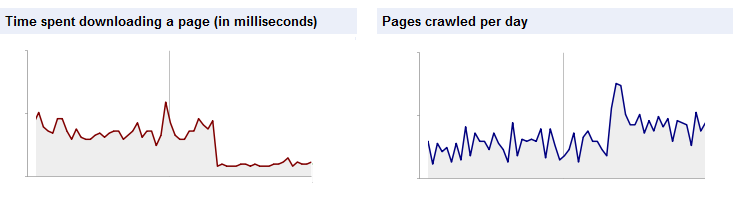

In terms of response time, users and robots don’t have the same experience of the same page. The graphs above are from Google Webmaster Tools, which shows performance from the robots’ perspective (in the ‘Crawl / Crawl stats’ section): that’s the download time for the HTML page alone, without associated resources such as images.

Need orders of magnitude to know when to be happy and when to worry? Here are some pointers:

Depending on where you start from, a reasonable objective is an average download time under 800 ms, or under 500 ms.

- Over 1000 ms: not good

- Between 500 and 1000 ms: okay

- Under 500 ms: excellent
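These pointers can be written as a small helper, for instance to flag an average download time read from the crawl stats. Treating exactly 1000 ms as "not good" and exactly 500 ms as "okay" is our own choice, since the pointers leave the boundaries open.

```python
def rate_download_time(avg_ms: float) -> str:
    """Rate an average HTML download time (in ms) using the rough pointers above."""
    if avg_ms < 500:
        return "excellent"
    if avg_ms < 1000:
        return "okay"
    return "not good"  # 1000 ms and above
```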

What about performance from a user’s perspective?

It’s almost a different topic. It also matters a lot, as user experience has become one of the top ranking factors. But it mainly matters for pages that are visited by a significant number of users, for which Google has a large amount of signals. That’s a much smaller set than all crawled pages and, more importantly, it concerns the time needed to fully render the page, which calls for other types of optimizations.

What can you do to improve crawl performance?

Session management

Most websites treat robots and users the same way. But when a request comes from a robot, why spend time and energy on things that are meant for real users? The typical example is session information management. Why would you create a session for a robot? If you don’t, you will deliver pages faster, and it won’t make any difference to the robot.
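A minimal sketch of the idea, assuming a Python web app that keeps sessions server-side. The User-Agent token list, the `session_id` cookie name and the in-memory `store` dict are all hypothetical. User-Agent strings can be spoofed, so use this check only to skip work (as here), never to grant anything.

```python
import re
import secrets
from http.cookies import SimpleCookie
from typing import Mapping, Optional

# Hypothetical, non-exhaustive list of crawler User-Agent tokens.
BOT_PATTERN = re.compile(r"googlebot|bingbot|yandex|baiduspider|duckduckbot", re.IGNORECASE)
SESSION_COOKIE = "session_id"  # hypothetical cookie name


def is_known_bot(user_agent: str) -> bool:
    return bool(BOT_PATTERN.search(user_agent or ""))


def get_or_create_session(headers: Mapping[str, str], store: dict) -> Optional[str]:
    """Return a session id for human visitors, or None for known crawlers."""
    if is_known_bot(headers.get("User-Agent", "")):
        return None  # no session lookup, creation or storage for robots
    cookie = SimpleCookie(headers.get("Cookie", ""))
    morsel = cookie.get(SESSION_COOKIE)
    session_id = morsel.value if morsel is not None else None
    if session_id is None or session_id not in store:
        session_id = secrets.token_hex(16)  # new random session id
        store[session_id] = {}
    return session_id
```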

And then… where to start

Other performance optimizations are typically related to code and infrastructure, and are very specific to each website. The first question is where to start. The thing is, Google Webmaster Tools only provides average values, while different page templates probably have very different download times. And some templates are more critical than others for your organic traffic, or your traffic in general.
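Why a single average can mislead is easy to show by grouping download times per template. This sketch assumes templates can be recognized from URL path prefixes; the patterns (`/p/`, `/c/`, `/search`) and the sample numbers are hypothetical.

```python
import re
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

# Hypothetical templates, recognized by URL path prefix.
TEMPLATES = [
    ("product", re.compile(r"^/p/")),
    ("category", re.compile(r"^/c/")),
    ("search", re.compile(r"^/search")),
]


def template_of(path: str) -> str:
    for name, pattern in TEMPLATES:
        if pattern.search(path):
            return name
    return "other"


def average_by_template(samples: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Average download time (ms) per template, slowest template first."""
    groups = defaultdict(list)
    for path, download_ms in samples:
        groups[template_of(path)].append(download_ms)
    averages = {name: mean(values) for name, values in groups.items()}
    return dict(sorted(averages.items(), key=lambda item: item[1], reverse=True))


if __name__ == "__main__":
    samples = [("/p/1", 300), ("/p/2", 500), ("/c/shoes", 1200),
               ("/search?q=x", 2000), ("/about", 100)]
    print(average_by_template(samples))
```

With these made-up samples the overall average is 820 ms, which looks acceptable, while the search template averages 2000 ms.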

*So where precisely is there room for improvement? Which page templates are worth focusing on?*

That’s where Botify comes into play. Botify’s SEO analysis report provides:

- A global view of your website’s performance, with distribution details (how many good and not-so-good results)

[The screenshot has been updated to show the new Botify interface]

- Information to locate the slowest pages, with Suggested Filters that help isolate the lowest-performing pages

[The screenshot has been updated to show the new Botify interface]

- Any additional metric, to validate which pages are worth focusing on (for instance, to exclude the deepest pages). This is done in the URL Explorer, where we can get the full list of URLs matching a pattern and export the data (the default table already includes URL and download time).
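Once exported, the data can also be sliced outside the tool. This sketch assumes a CSV export with columns named `url`, `depth` and `download_time_ms`; those headers are hypothetical and the real export may name them differently, and depth must have been added as an extra metric.

```python
import csv
import re
from typing import Iterable, List, Tuple


def slowest_urls(lines: Iterable[str], url_pattern: str, max_depth: int,
                 limit: int = 10) -> List[Tuple[str, float]]:
    """Slowest URLs matching url_pattern, ignoring pages deeper than max_depth.

    Assumes hypothetical CSV columns: url, depth, download_time_ms.
    """
    pattern = re.compile(url_pattern)
    matches = []
    for row in csv.DictReader(lines):
        if not pattern.search(row["url"]):
            continue
        if int(row["depth"]) > max_depth:
            continue  # skip the deepest pages, which are less likely to be worth the effort
        matches.append((row["url"], float(row["download_time_ms"])))
    matches.sort(key=lambda item: item[1], reverse=True)
    return matches[:limit]
```

It accepts any iterable of CSV lines, so an open file works directly, e.g. `slowest_urls(open("export.csv", newline=""), r"/p/", max_depth=5)`.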

A special optimization that works like magic

Most content doesn’t change much once published, or doesn’t change at all. We can avoid delivering the same content to Google over and over again when it hasn’t changed. That’s what the HTTP 304 status code is for. It means ‘Not Modified’, and is sent in response to an HTTP request that includes an ‘If-Modified-Since’ header, which Google does use. More on this soon; it deserves its own post.
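The server-side decision can be sketched as a plain function, assuming you know each page’s last modification time as a timezone-aware datetime. How that time is obtained (database column, file mtime) is up to your stack; framework integration is left out.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime
from typing import Dict, Mapping, Optional, Tuple


def conditional_response(headers: Mapping[str, str],
                         last_modified: datetime) -> Tuple[int, Dict[str, str]]:
    """Return (status, response headers): 304 if unchanged since If-Modified-Since, else 200.

    last_modified must be timezone-aware.
    """
    # HTTP dates have one-second resolution, so drop sub-second precision
    last_modified = last_modified.astimezone(timezone.utc).replace(microsecond=0)
    response_headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}
    ims = headers.get("If-Modified-Since")
    if ims:
        since: Optional[datetime]
        try:
            since = parsedate_to_datetime(ims)
        except (TypeError, ValueError):
            since = None  # malformed date: ignore the header and send the full page
        if since is not None and since.tzinfo is not None and last_modified <= since:
            return 304, response_headers  # no body needs to be rendered or sent
    return 200, response_headers
```

The saving comes from the 304 branch: the server can skip rendering the page entirely, and the robot downloads only headers.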

Beware: some optimizations are good for users but not for robots

Some optimizations that work great for users won’t be so great for robots, simply because they rely on expected user behavior, and robots don’t act like users. The best example is CDNs (Content Delivery Networks, such as Akamai).

A CDN keeps local caches of its customers’ websites in many places around the world, each containing the most requested pages in that part of the world. It is meant to ensure a better user experience, and retains copies of the pages that receive the most visits. Search engine robots, on the other hand, go almost everywhere on the website, and they certainly go places where users seldom go.

That means search engine robots will often trigger cache misses. The CDN then has to fetch the page from the origin (the site’s web server) before it can deliver it, which takes more time than if the robot had requested the page directly from the web server. And the robot could be halfway across the planet anyway, so even with a cache hit, the gain is not obvious. So CDNs don’t do much for robots.
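The cache-miss argument can be illustrated with a toy least-recently-used (LRU) cache. This is not how any particular CDN works; the cache size, page counts and traffic pattern are hypothetical, chosen only to contrast concentrated user traffic with a crawler sweeping every page once.

```python
from collections import OrderedDict
from typing import Iterable


class LRUCache:
    """Tiny LRU cache that only tracks which URLs are cached (no content)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.entries = OrderedDict()

    def request(self, url: str) -> bool:
        """Return True on a hit; on a miss, 'fetch from origin' and cache the URL."""
        if url in self.entries:
            self.entries.move_to_end(url)  # mark as most recently used
            return True
        self.entries[url] = True
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used URL
        return False


def hit_rate(cache: LRUCache, urls: Iterable[str]) -> float:
    results = [cache.request(url) for url in urls]
    return sum(results) / len(results) if results else 0.0


if __name__ == "__main__":
    cache = LRUCache(capacity=100)
    users = [f"/page/{i}" for i in range(20)] * 50  # 1,000 visits on 20 popular pages
    crawl = [f"/page/{i}" for i in range(10_000)]  # robot requests every page once
    print(f"users:   {hit_rate(cache, users):.1%}")  # 98.0%
    print(f"crawler: {hit_rate(cache, crawl):.1%}")  # 0.2%
```

Even with a cache warmed by users, the crawler only hits the handful of popular pages; every other request goes back to the origin server.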

We are not saying this to recommend avoiding CDNs (they obviously serve a purpose and serve it well). We are merely suggesting caution when thinking ‘performance optimization’.

Think ‘bots’

From the examples we have covered, it becomes clear that there are:

- Optimizations for users (like CDNs),

- Optimizations for robots (like not storing session information),

- Optimizations for all (like responding with a 304 status code to an ‘If-Modified-Since’ HTTP request, or optimizing the HTML code)

Not to be confused.