The mysterious algorithm that rules how and when your site shows up in search results may never be truly understood (if only because it’s always changing), but Googlebot does leave clues behind wherever it goes. Whenever a bot has visited your site and crawled a page – or several thousand pages – it leaves a mark. It’s up to you to follow this trail to find out where the bots have been – and where they haven’t. What you do next will determine your SEO success.

Crawls & Visits: Putting the pieces together

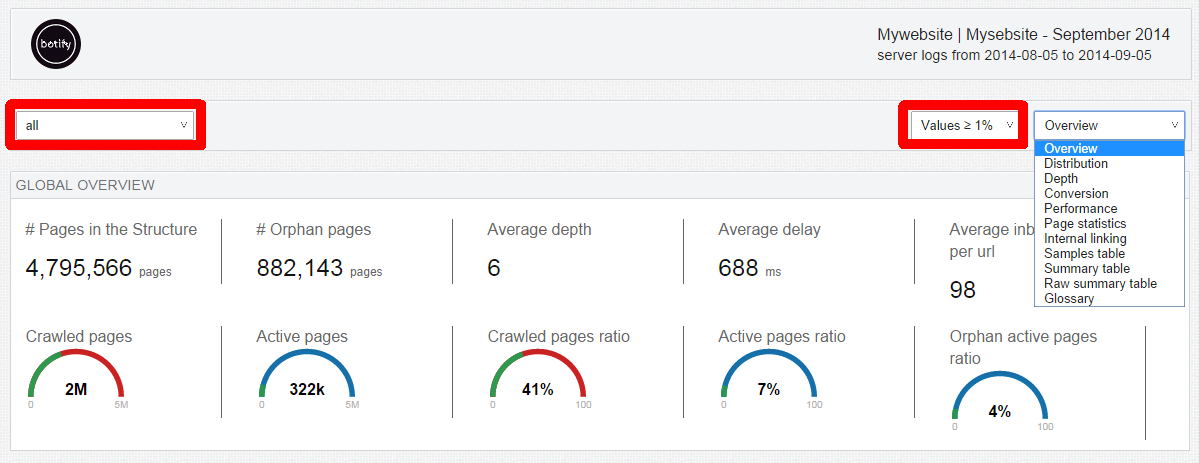

What pages of your website have actually been crawled by Google? Which haven’t? Critical pages may be left uncrawled, while others that have been crawled may be useless to your SEO.

With log analysis, you can answer two important questions:

- Which pages have been crawled by Googlebot?

- Which pages are bringing in organic traffic?

Essentially, you get visibility into the differences between crawls and visits.
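As a rough sketch of how those two questions can be answered from raw logs, the Python below assumes your server writes the common Combined Log Format. It treats any request whose user agent mentions Googlebot as a crawl, and any other request with a Google referrer as an organic visit. The `classify` helper and the sample lines are illustrative only. User agents can be spoofed, so a production check would also verify the requesting IP.

```python
import re
from urllib.parse import urlparse

# Assumed format: ip - - [time] "METHOD path PROTO" status size "referrer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"$'
)


def classify(lines):
    """Return (crawled, visited): paths requested by Googlebot and by organic visitors."""
    crawled, visited = set(), set()
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if not match:
            continue  # skip lines that do not fit the assumed format
        path = match["path"]
        if "Googlebot" in match["agent"]:
            crawled.add(path)
            continue
        # Rough heuristic: a google.com referrer counts as an organic visit.
        host = urlparse(match["referrer"]).hostname or ""
        if host == "google.com" or host.endswith(".google.com"):
            visited.add(path)
    return crawled, visited


# Made-up sample lines for illustration.
sample = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /shoes HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:14:02:11 +0000] "GET /shoes HTTP/1.1" 200 512 '
    '"https://www.google.com/" "Mozilla/5.0"',
    '66.249.66.1 - - [10/Oct/2023:14:05:00 +0000] "GET /old-promo HTTP/1.1" 200 128 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

crawled, visited = classify(sample)
print("Crawled but never visited:", sorted(crawled - visited))
```

Comparing the two sets is the whole point: pages in `crawled` but not in `visited` are using crawl budget without bringing in traffic.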

Why is this important? Without insight into your web server’s logs, you would never know whether 50% of your crawled pages were driving almost no SEO traffic while your critical SEO content went uncrawled.

Once you see what’s happening on your site, achieving a 100% crawl rate (the share of your site’s total pages that Googlebot actually crawls) should be at the top of your to-do list. That is how you ensure your critical categories and content receive the crawls, and the organic visits, they deserve.
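To make the crawl rate concrete, here is a minimal sketch. It assumes you already have two sets of URLs: every page on your site and every page Googlebot requested. The `crawl_rate` function name and the sample paths are hypothetical.

```python
def crawl_rate(site_pages, crawled_pages):
    """Share of known site pages that Googlebot requested at least once."""
    site_pages = set(site_pages)
    if not site_pages:
        return 0.0
    return len(site_pages & set(crawled_pages)) / len(site_pages)


# Made-up data: "/old-promo" was crawled but is no longer part of the site.
site_pages = {"/", "/shoes", "/boots", "/sandals"}
crawled_pages = {"/", "/shoes", "/old-promo"}

rate = crawl_rate(site_pages, crawled_pages)
uncrawled = sorted(site_pages - crawled_pages)

print(f"Crawl rate: {rate:.0%}")
print("Still uncrawled:", uncrawled)
```

The `uncrawled` list is the practical output: those are the pages to check for missing internal links or sitemap entries.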

Building internal connections


If Google (or Bing, or Yahoo) doesn’t see parts of your website, customers won’t see them either. A critical part of connecting bots with each page of your site rests in building your internal linking structure to lead users (and bots) from one page to the next, rather than sending them out into the wild.

How do you improve your internal linking in a way that’s meaningful for your SEO?

- Relink or block orphan pages

- Eliminate useless pages

- Update your sitemap(s)

Save the orphans! No, really; it’s important to rescue your orphan URLs so that both bots and customers can navigate flawlessly through your website. What’s an orphan URL? Orphan pages (or URLs) are pages that no other page on your site links to. Googlebot can continue to crawl them if they appear in sitemaps or are linked from other websites, but a typical user cannot reach them through the normal flow of your website. Ultimately, they’re a waste of good crawl budget: they don’t pass authority, and they leave any bot or customer who does land on them at a dead end. Relink them with the rest of your site so that Googlebot can find its way there and back, and so that customers have a call to action to explore further instead of bouncing off to some other site.
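One way to spot orphans, sketched below, is to walk your internal links from the homepage and compare the pages you can reach with the pages you know exist (from sitemaps or server logs). The `links` map and `known_pages` set are made-up inputs; in practice they would come from a site crawl and your logs.

```python
from collections import deque


def reachable_from(start, links):
    """Breadth-first walk of internal links, returning every page reachable from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


# Hypothetical internal link map: page -> pages it links to.
links = {
    "/": ["/shoes", "/boots"],
    "/shoes": ["/", "/boots"],
    "/boots": ["/"],
    "/old-promo": ["/"],  # links out, but nothing links in
}
# Pages known from sitemaps or server logs.
known_pages = {"/", "/shoes", "/boots", "/old-promo"}

orphans = sorted(known_pages - reachable_from("/", links))
print("Orphan pages:", orphans)
```

Note that `/old-promo` links back to the homepage yet still counts as an orphan, because no page links to it.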

Next, identify which pages of your site are not useful for SEO. Are they being crawled? Are they outdated? Eliminate them. Your web server logs allow you to see which pages are crawled by bots every day, and compare that information with your organic traffic. If pages are being crawled that are ineffective and not driving any meaningful SEO traffic, it’s time to let them go to make room for pages that are worthwhile and far more likely to drive traffic (and revenue). Take action to either eliminate the pages completely or block Google from crawling them if they offer no SEO value.
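The comparison described above can be sketched in a few lines, assuming you have already counted Googlebot hits and organic visits per page. The `crawl_waste` helper, the `min_visits` threshold, and the numbers are all hypothetical.

```python
from collections import Counter


def crawl_waste(crawl_hits, organic_visits, min_visits=1):
    """Pages Googlebot crawls that bring fewer than min_visits organic visits,
    most heavily crawled first."""
    wasted = [page for page in crawl_hits if organic_visits.get(page, 0) < min_visits]
    return sorted(wasted, key=lambda page: (-crawl_hits[page], page))


# Made-up counts for one reporting period.
crawl_hits = Counter({"/shoes": 40, "/old-promo": 25, "/print-view": 12})
organic_visits = Counter({"/shoes": 310})

candidates = crawl_waste(crawl_hits, organic_visits)
print("Candidates to remove or block:", candidates)
```

Sorting by crawl hits puts the biggest drains on crawl budget at the top of the list, which is usually where to start.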

Your sitemap file is a handy roadmap of your most important pages for Googlebot to find the best route through your website. Make the most of it! Make sure your sitemap includes only critical pages with strategic content and categories, so Google can spend its resources wisely on pages that matter. Don’t waste your sitemap file on pages that are useless to your SEO, are not linked in your site, or have been eliminated (see above).
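A quick audit along these lines might look like the sketch below. It reads the `<loc>` entries from a standard XML sitemap and compares them with a list of strategic URLs that you maintain yourself. The `audit_sitemap` helper, the example.com URLs, and the `strategic_urls` set are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> URL listed in a sitemap document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]


def audit_sitemap(xml_text, strategic_urls):
    """Split sitemap entries into keep/drop and list strategic URLs that are missing."""
    urls = sitemap_urls(xml_text)
    keep = [u for u in urls if u in strategic_urls]
    drop = [u for u in urls if u not in strategic_urls]
    missing = sorted(set(strategic_urls) - set(urls))
    return keep, drop, missing


# Made-up sitemap and strategic list.
SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/shoes</loc></url>
  <url><loc>https://example.com/old-promo</loc></url>
  <url><loc>https://example.com/print-view</loc></url>
</urlset>"""
strategic_urls = {"https://example.com/shoes", "https://example.com/boots"}

keep, drop, missing = audit_sitemap(SITEMAP, strategic_urls)
print("Keep:", keep)
print("Drop:", drop)
print("Add:", missing)
```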

Control the Crawl

Knowledge is power, and thanks to your log analysis you have the power to change not only how Google sees your site, but how it appears in search results and how new customers find you.

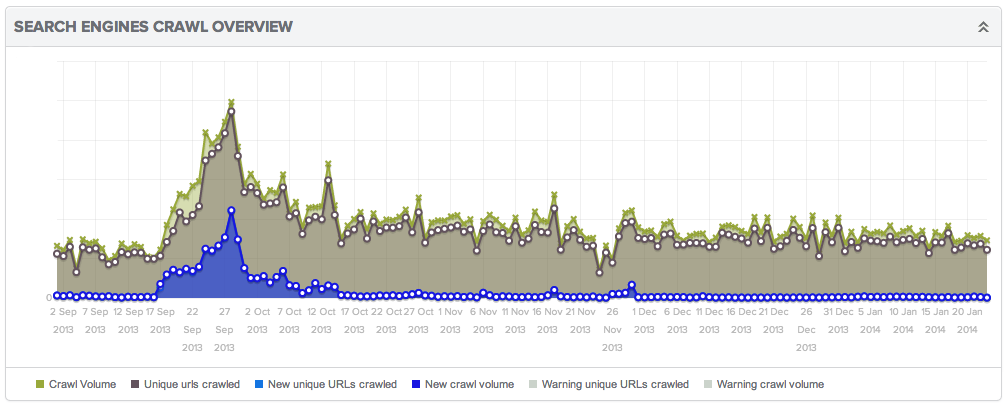

With server logs updated daily to reflect all the traffic and bots that have visited your site, you can constantly monitor the performance of your website. From one day to the next, you can see a precise list of the new pages Googlebot has found. Real data gives you proof of how each page performs and the insight you need to take action and effect real change for your SEO.
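The day-to-day list of newly discovered pages comes down to a running set difference. The sketch below assumes you have already extracted, for each day, the set of paths Googlebot requested; the `new_pages_by_day` helper and the dates are made up.

```python
def new_pages_by_day(crawled_by_day):
    """For each day (ISO date string), list pages Googlebot requested for the first time."""
    seen = set()
    report = {}
    for day in sorted(crawled_by_day):  # ISO dates sort chronologically as strings
        todays = set(crawled_by_day[day])
        report[day] = sorted(todays - seen)
        seen |= todays
    return report


# Hypothetical per-day crawl data extracted from server logs.
crawled_by_day = {
    "2023-10-10": {"/", "/shoes"},
    "2023-10-11": {"/", "/boots"},
    "2023-10-12": {"/shoes", "/boots", "/sandals"},
}

report = new_pages_by_day(crawled_by_day)
for day, pages in report.items():
    print(day, pages)
```

Keep in mind that the first day in the report lists every page as new, simply because there is no earlier history to compare against.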

Do you know how Google sees your website? Find out now with Botify’s LogAnalyzer!