In a previous article, we covered what faceted navigation is and why it causes problems. If you’ve read that and you’re here, you’ve probably established that your facets are causing issues and need fixing. If you haven’t, do check out the previous article first, before reading further. Here we go through the common handling methods and their pitfalls, and give you guidance on how to prioritize your fixes.

Common Facet Handling Methods & Potential Pitfalls

When it comes to handling faceted navigation, there are a number of tools we can use, and the best results usually come from combining them. Every site is different, which means there isn’t a ‘one size fits all’ solution. Make sure you understand the considerations below before you start changing your robots.txt or asking your developers to implement noindex and nofollow tags.

Let’s go over some of the most common tools you can use to restrict search engine bots from crawling or indexing your pages, and what you need to be careful about.

Noindex

A noindex meta tag tells search engines not to index a page, but it doesn’t stop bots from crawling that page. It therefore won’t solve the problem of crawl budget waste, and you still risk diluting your internal PageRank. It’s also useful to keep in mind that it can take some time for Google to re-index a page after the noindex tag is removed, so treat noindex as a long-term decision rather than something to switch on and off.

PRO Tip: Use noindex on low inventory pages. If the inventory is likely to change, make sure to regularly create a sitemap of the URLs whose noindex/index status has changed.
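The sitemap from the tip above could be generated with a short script. This is a minimal sketch, assuming you can export two snapshots that map each URL to its noindex flag. The function names and example URLs are hypothetical, and only URLs present in both snapshots are compared.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def changed_status_urls(previous, current):
    """Return URLs whose noindex flag differs between two snapshots.

    Both arguments map URL -> True (noindex) or False (indexable).
    URLs that appear in only one snapshot are ignored in this sketch.
    """
    return sorted(
        url
        for url, noindex in current.items()
        if url in previous and previous[url] != noindex
    )


def build_sitemap(urls):
    """Serialize the given URLs as a minimal XML sitemap string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    before = {"https://example.com/shoes/red": False}
    after = {"https://example.com/shoes/red": True}
    print(build_sitemap(changed_status_urls(before, after)))
```

Submitting only the changed URLs keeps the sitemap small and makes it easy to remove once the pages have been recrawled.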

Nofollow

Nofollowing links from your navigation to faceted URLs will help with your crawl budget issue. However, these pages can still get indexed if they have backlinks, links from articles, or were indexed previously, so you can still end up with duplicate content and diluted internal PageRank.

PRO Tip: Make sure to combine nofollows with other tactics to limit discoverability.
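To check where nofollow is still missing, you can audit a page’s HTML for links to faceted URLs. The sketch below uses only Python’s standard library. The facet parameter names (`color`, `size`, `brand`) are assumptions for illustration, so replace them with the parameters your own site uses.

```python
from html.parser import HTMLParser
from urllib.parse import parse_qs, urlsplit

# Hypothetical facet parameters; adjust to match your own URLs.
FACET_PARAMS = {"color", "size", "brand"}


class FacetLinkAuditor(HTMLParser):
    """Collects links to faceted URLs that lack rel="nofollow"."""

    def __init__(self):
        super().__init__()
        self.followed_facet_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href") or ""
        rel_tokens = (attr.get("rel") or "").lower().split()
        query_keys = set(parse_qs(urlsplit(href).query, keep_blank_values=True))
        if FACET_PARAMS & query_keys and "nofollow" not in rel_tokens:
            self.followed_facet_links.append(href)


def find_followed_facet_links(html):
    """Return hrefs of facet links in the HTML that are still followed."""
    auditor = FacetLinkAuditor()
    auditor.feed(html)
    return auditor.followed_facet_links
```

Running this over your category templates gives a list of navigation links that crawlers can still follow into faceted URLs.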

Robots.txt

Robots.txt rules stop crawlers from accessing faceted pages altogether, which resolves most issues. However, if you haven’t considered search demand or inventory and these pages have high-value links, you could lose external link equity. Another consideration is that you might block crawling of pages that have already been indexed or that have followed links from other pages, which means they can still appear in Google’s index.

PRO Tip: Block pages only once they have been removed from Google’s index. You can use Google’s removal tool for individual URLs or create a sitemap with all pages that have updated ‘noindex’ meta tags. Make sure to remove this sitemap once noindexing is complete. Only block pages that have no search demand and are wasting your crawl budget, and so preventing crawlers from reaching much more valuable pages.
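Before deploying new robots.txt rules, it helps to test them against a sample of URLs. Here is a small sketch using Python’s built-in `urllib.robotparser`. The `/filter/` rule and the example URLs are hypothetical. Note that this parser matches rules by path prefix, so the sketch avoids wildcard patterns, and real crawlers may interpret more complex rules differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block every URL whose path starts with /filter/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /filter/
"""


def is_crawlable(robots_txt, url, user_agent="*"):
    """Return True if the given rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/filter/color-red",
        "https://example.com/shoes/",
    ):
        print(url, is_crawlable(ROBOTS_TXT, url))
```

Checking a list of your most valuable URLs this way is a quick safeguard against blocking pages you want crawled.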

Canonicals

Canonicals can be easy to use and seem like a good way to resolve duplicate content while passing on link equity. The main issue arises when they are used on pages that are not exact duplicates, which can cause search engines to ignore your canonical tags.

PRO Tip: Only use canonicals on exact and high-similarity duplicates. If you want to keep faceted pages with unique content, use self-referencing canonicals.
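One way to apply this tip is to derive the canonical URL from the parameters in the request. The sketch below assumes that parameters such as `sort` and `view` only reorder or restyle the same products (so those URLs are duplicates), while any other parameter produces unique content and keeps a self-referencing canonical. The parameter names are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change which products are shown.
DUPLICATE_PARAMS = {"sort", "view"}


def canonical_url(url):
    """Drop duplicate-only parameters and sort the rest for consistency."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in DUPLICATE_PARAMS
    )
    # Fragments never reach the server, so they are dropped as well.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
```

With these assumptions, `https://example.com/shoes?sort=price` points to `https://example.com/shoes`, while `https://example.com/shoes?color=red` stays its own canonical.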

JavaScript (JS)

JS can be used to filter or sort faceted content, but it is one of the most development-intensive options. It can also make it difficult for users to share links or navigate if the URL does not update with user interactions.

PRO Tip: Add parameters to the URLs using JavaScript to make sure your faceted navigation is both search engine and user-friendly. This way your URLs will still be shareable.

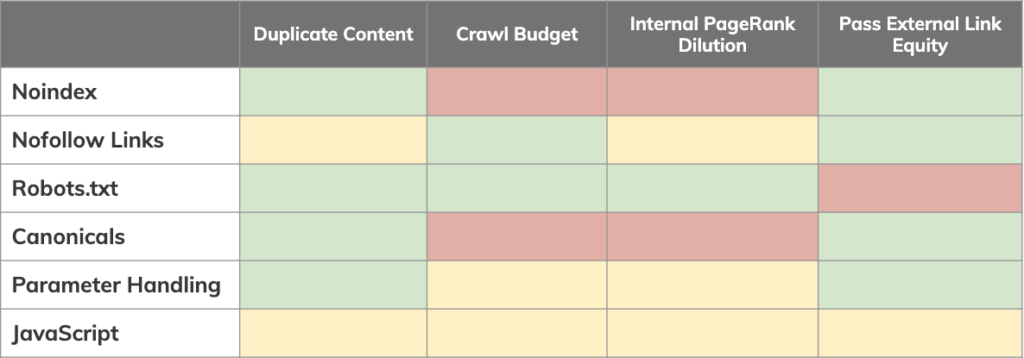

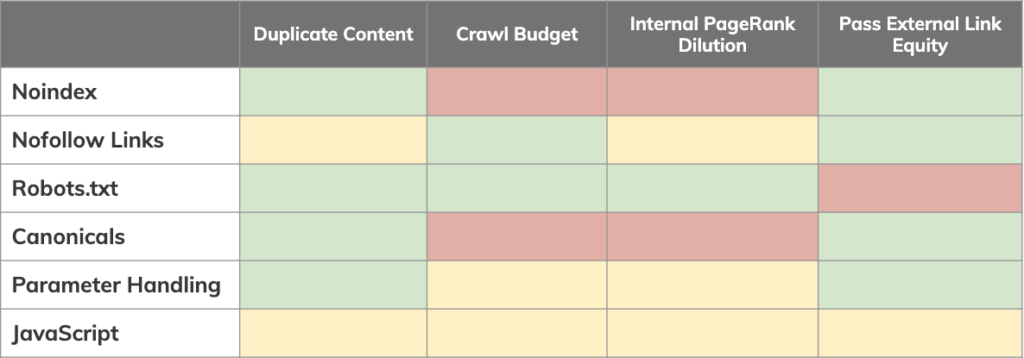

Cheat Sheet

In order to help you choose a combination of tactics, we’ve created this cheat sheet for you to find the right tools to work with when handling faceted navigation.

Now you know the tools you need to use, but where do you start?

As you will need to implement a series of tactics, it’s important to know how to prioritize them, and that mainly depends on which core problem matters most. What is hurting your site more? Is it the wasted crawl budget or the diluted link equity? Based on your answer, we recommend one of two approaches.

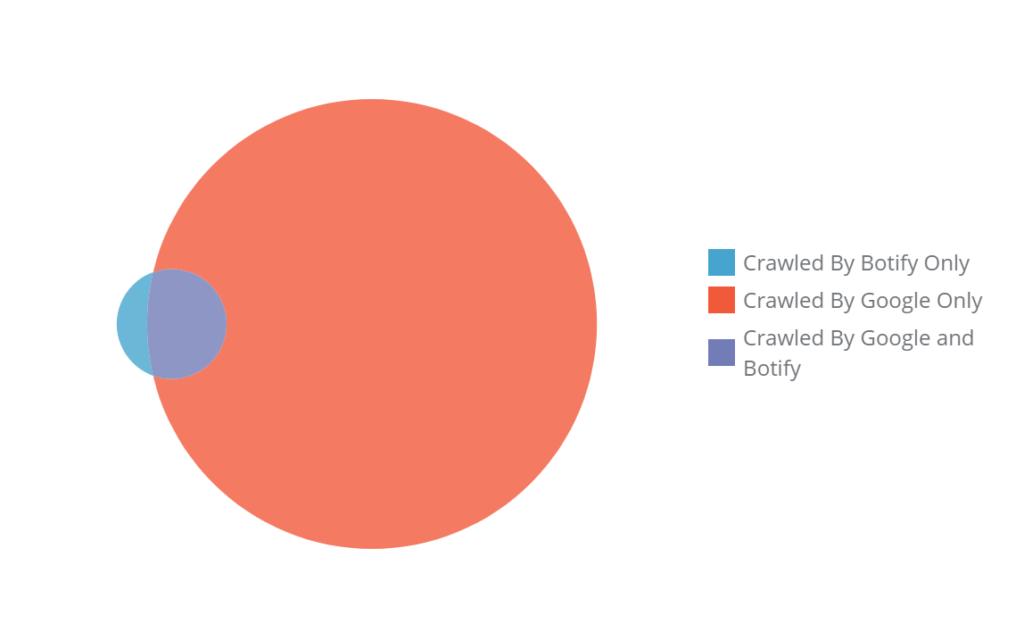

Tear it down & build it back up – tackling bloated crawl budget

If crawl budget is your core issue, then you may need to address it with strong measures. In this scenario, you’ll focus on restricting the crawl by robots.txt rules, nofollow links, and parameter handling.

What’s likely to happen is that you’ll see an improvement in how your crawl budget is used, but you might end up with a large number of orphan pages. You must then identify which of these orphans are valuable enough (traffic, search demand, inventory) to be reintroduced into your site structure.
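The orphan review can be sketched as a simple filter over exported page data. The field names and thresholds below are hypothetical and should be tuned per site. The rule used here (enough inventory, plus either traffic or search demand) is just one reasonable way to read the three criteria above.

```python
from dataclasses import dataclass


@dataclass
class OrphanPage:
    url: str
    monthly_visits: int
    monthly_searches: int
    products_in_stock: int


def pages_to_reintroduce(pages, min_visits=10, min_searches=50, min_products=5):
    """Return orphans worth re-linking, most visited first.

    Thresholds are illustrative assumptions, not recommended values.
    """
    keep = [
        page
        for page in pages
        if page.products_in_stock >= min_products
        and (page.monthly_visits >= min_visits or page.monthly_searches >= min_searches)
    ]
    return sorted(
        keep,
        key=lambda page: (page.monthly_visits, page.monthly_searches),
        reverse=True,
    )
```

Sorting by visits and search demand puts the pages with the most to gain from internal links at the top of the list.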

Client Case Study: When working with a smaller e-commerce client, introducing crawl restrictions improved the crawl ratio by 20%. The next step was to work through the 500,000 orphan pages and assess which of them needed to be re-included in the crawl.

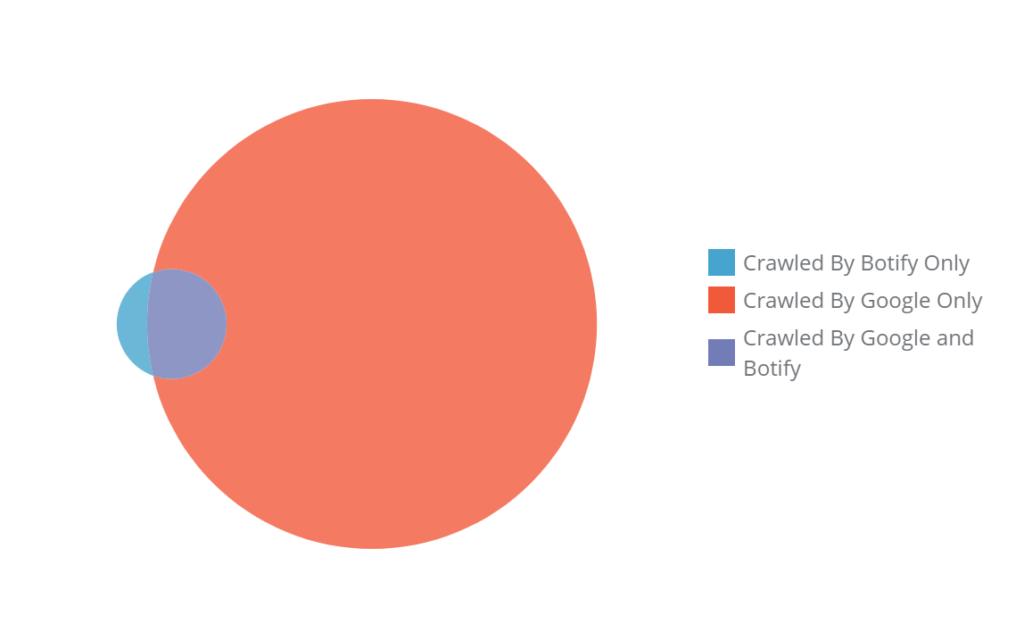

Slow & Steady – Tackle Diluted Link Equity

If your biggest issue is diluted link equity and duplicate content, it’s best to start with a combination of canonicals and noindex. You can also use nofollow and parameter handling in Google Search Console (GSC).

JavaScript usually falls under this approach, as it is more resource-intensive and requires more planning of additional internal linking opportunities for faceted pages with high search demand and inventory. Taking this approach means that robots.txt is only used for facets that are proven to waste crawl budget and that also have no significant search demand, no inventory to support the page, and no valuable link equity.
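To make the order of decisions concrete, here is one way to express the slow & steady approach as a function. It is a sketch of our reading of the guidance in this article, not a definitive rule set. The `Facet` fields and both thresholds are hypothetical.

```python
from dataclasses import dataclass

# Illustrative thresholds; choose values that fit your own site.
MIN_SEARCHES = 50
MIN_PRODUCTS = 5


@dataclass
class Facet:
    url: str
    is_duplicate: bool        # exact or high-similarity duplicate of another page
    monthly_searches: int
    products_in_stock: int
    has_valuable_links: bool  # external links worth preserving


def recommend(facet):
    """Suggest a handling tactic for a single faceted URL."""
    if facet.is_duplicate:
        return "canonical to the main page"
    has_demand = facet.monthly_searches >= MIN_SEARCHES
    has_inventory = facet.products_in_stock >= MIN_PRODUCTS
    # robots.txt is the last resort: no demand, no inventory, no link equity.
    if not has_demand and not has_inventory and not facet.has_valuable_links:
        return "robots.txt block"
    if not has_inventory:
        return "noindex"
    if not has_demand:
        return "nofollow internal links"
    return "keep indexable with self-referencing canonical"
```

The duplicate check comes first because canonicals pass on link equity, and the robots.txt branch is deliberately the narrowest.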

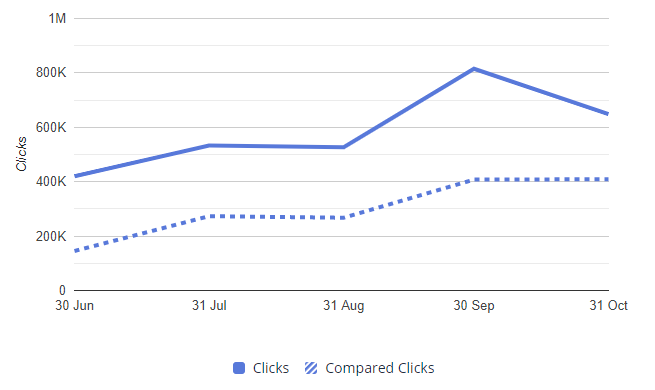

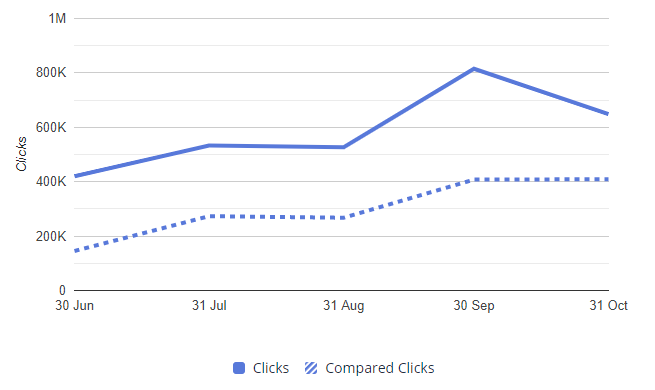

Client Case Study: When working with a large e-commerce client going through a migration, we discovered that all facets were crawlable pages, which meant that 99% of crawled pages were faceted URLs, with no parameter handling set up. During the migration, we helped the client introduce a combination of techniques, including parameter handling in GSC, noindexing pages without enough inventory, nofollowing facets without enough search demand, and eventually using robots.txt to limit the crawling of some facets. This slow & steady approach led to a 95% increase in organic traffic year over year.